
Deploying Kubernetes on Bare Metal Server

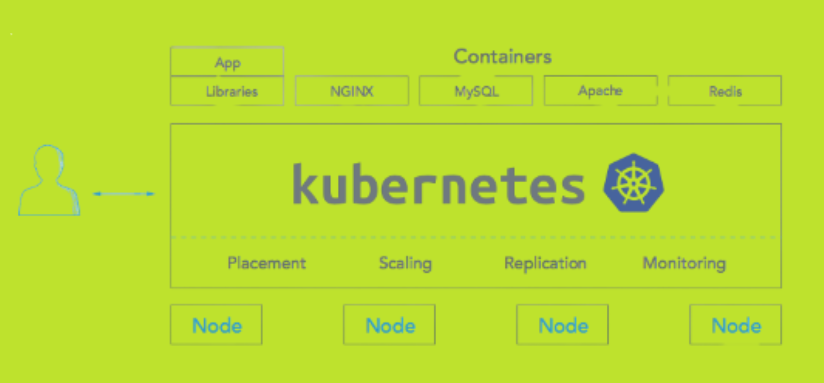

Kubernetes orchestrates compute, networking, and storage infrastructure on behalf of user workloads. Since its release in 2014, it has attracted users who need to manage large volumes of workloads and data. It is preferred for four main reasons:

- Provides a container platform

- Acts as a platform for microservices

- Provides an easily operable cloud platform

- Provides a platform-independent layer that can communicate with other platforms

Does Kubernetes run on bare metal? Yes. At present, you can set up a cluster on VMware, on a cloud platform, or on physical bare-metal servers. In this article, you’ll learn in detail how to install Kubernetes on bare-metal servers.

In our previous article, we discussed how to set up a dynamic NFS provisioning server for Kubernetes. Before we start, let’s review some facts:

- kube-proxy, which runs on every node in the cluster, must keep latency very low for container traffic. Running on physical machines such as bare-metal servers helps achieve this.

- Bare-metal servers have shown higher performance than VMs.

- However, such a high-end arrangement requires you to set up high-end physical machines yourself, which is much more difficult than using AWS or GCP.

- Without a cloud provider's infrastructure underneath, the system is less resilient, yet it cannot afford to go down.

Let’s dive into the details and walk through setting up Kubernetes on physical bare-metal servers.

Table of Contents

- Why Deploy or Install Kubernetes on Bare Metal Server?

- Why will it not always match your needs?

- Benefits of Kubernetes with bare-metal infrastructure

- Step by Step Process to Setup Kubernetes on a Bare Metal Server

- Challenges of Using Kubernetes with Bare Metal Servers

- Conclusion

Why Deploy or Install Kubernetes on Bare Metal Server?

As mentioned above, Kubernetes can be installed in three ways: on VMware virtual machines, in the cloud, or on bare-metal servers. Each model works differently. The overall efficiency of each option is measured by evaluating the entire system on parameters such as speed of availability, complexity, management overhead, cost, and operational involvement.

Benefits of Kubernetes with Bare Metal infrastructure

- Network deployment and operations are simplified through easier lifecycle management. Because the virtualization layer is not part of the cloud stack, a smaller team can handle operations and management safely, and the simpler infrastructure makes troubleshooting faster.

- Eliminating the virtualization layer drastically reduces infrastructure overhead, leaving more compute and storage resources for application deployments. Overall hardware efficiency increases, which helps when running resource-constrained edge computing applications at remote sites.

- No software license fees are needed for the virtualization software, resulting in substantial cost savings.

- Application performance improves, since the guest operating system and virtual switches are removed along with the virtualization layer.

- Automated lifecycle management and the seamless introduction of CI/CD are some of the vital benefits of this type of infrastructure. With fewer layers, end-to-end automation becomes easier.

- New hardware acceleration technologies such as SmartNICs (smart Network Interface Cards) and GPUs (Graphics Processing Units) help demanding applications, such as 5G workloads, run smoothly.

Step by Step Process to Setup Kubernetes on a Bare Metal Server

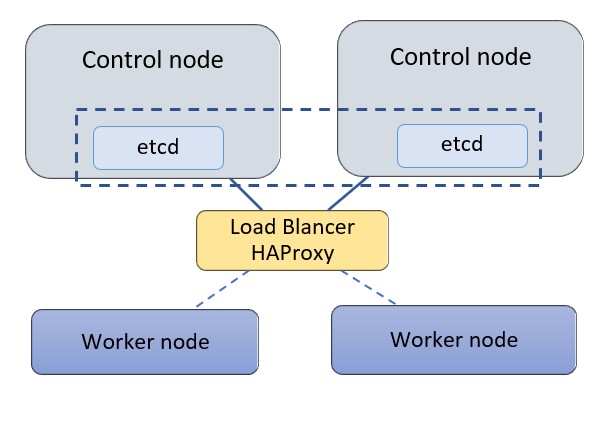

To walk through the setup step by step, we use a cluster of two control nodes and two worker nodes behind a standalone HAProxy load balancer (LB). Each node is a bare-metal server.

In production, three control nodes are typically used so that etcd can form a quorum on the cluster state. Here we use two control nodes to demonstrate the high-availability setup.
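The quorum rule behind that recommendation is simple arithmetic: etcd needs a majority of its members, floor(n/2) + 1, to accept writes, so a cluster of n members tolerates n minus that many failures. The sketch below prints the numbers for small clusters; `etcd_quorum` and `etcd_fault_tolerance` are hypothetical helper names, not part of kubeadm or etcd.

```bash
# Quorum for an etcd cluster of n members: floor(n / 2) + 1.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while a quorum is still available.
etcd_fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Print a small table for clusters of 1 to 5 members
for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

For the two control nodes used in this example, the quorum is 2 and no member failure is tolerated; three members tolerate one failure, which is why three is the usual minimum.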

Configurations Considered in the Example:

- Kubernetes 1.18.6 on bare-metal machines.

- Ubuntu 18.04

- API Server to manage cluster components

- Controller-manager

- Scheduler

- etcd (the key-value store used as the backend for service discovery and cluster state. etcd can be deployed on its own standalone nodes, but for this exercise we keep it on the control nodes.)

- Standalone node (runs HAProxy to load-balance traffic to the control nodes and support control plane redundancy)

- A private network for communication within the cluster. In our case, the network is 172.31.113.0/26.
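Before assigning addresses, it can help to confirm that each node address falls inside the private network. The sketch below does that check with plain shell arithmetic on dotted-quad IPv4 addresses; `ip_to_int` and `in_subnet` are hypothetical helper names, and the code assumes well-formed input (it does not check that each octet is at most 255).

```bash
# Convert a dotted-quad IPv4 address (e.g. 172.31.113.48) to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if IPv4 address $1 lies inside CIDR block $2 (e.g. 172.31.113.0/26).
in_subnet() {
    local net=${2%/*} bits=${2#*/} mask
    # Network mask with the top "bits" bits set, kept within 32 bits
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, `in_subnet 172.31.113.48 172.31.113.0/26` succeeds. A /26 block spans 172.31.113.0 to 172.31.113.63, so an address such as 172.31.113.65 (the load balancer address used in Step 1) falls outside it and is worth checking against your own network plan.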

Steps Involved:

- Step 1: Configuring HAProxy as a Load Balancer

- Step 2: Install Docker and Related Packages on All Kubernetes Nodes

- Step 3: Prepare Kubernetes on All Nodes

- Step 4: Install the Key-Value Store “Etcd” on the First Control Node and Bring Up the Cluster

- Step 5: Add a Second Control Node (master2) to the Cluster

- Step 6: Add the Worker Nodes

Step 1: Configuring HAProxy as a Load Balancer

This step runs on the standalone load balancer node, which is also a bare-metal server. The following IP addresses are used in this example to make the steps easier to follow:

```bash
# Create a file with the list of IP addresses in the cluster
cat > kubeiprc <<EOF
export KBLB_ADDR=172.31.113.65
export CTRL1_ADDR=172.31.113.48
export CTRL2_ADDR=172.31.113.62
export WORKER1_ADDR=172.31.113.46
export WORKER2_ADDR=172.31.113.49
EOF

# Add the IP addresses of the nodes as environment variables
chmod +x kubeiprc
source kubeiprc
```
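Because every later step relies on these variables, a quick sanity check after sourcing kubeiprc can catch a typo early. This is a minimal sketch: `check_kubeiprc` is a hypothetical helper, and it only checks that each variable is set and has the shape of an IPv4 address.

```bash
# Hypothetical helper: verify that each kubeiprc variable is set and has the
# shape of an IPv4 address (four dot-separated groups of 1-3 digits).
check_kubeiprc() {
    local status=0 name value
    for name in KBLB_ADDR CTRL1_ADDR CTRL2_ADDR WORKER1_ADDR WORKER2_ADDR; do
        # Read the variable whose name is stored in $name
        eval "value=\${$name:-}"
        if ! printf '%s\n' "$value" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
            echo "missing or malformed: $name='$value'" >&2
            status=1
        fi
    done
    return "$status"
}
```

Run it as `source kubeiprc && check_kubeiprc`; it prints any missing or malformed variable to stderr and returns a non-zero status.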

Install the HAProxy package:

```bash
apt-get update && apt-get upgrade -y && apt-get install -y haproxy
```

Back up the HAProxy configuration file /etc/haproxy/haproxy.cfg and replace it as follows (kubeiprc must be sourced in the same shell so that the variables expand):

```bash
## Back up the current file
mv /etc/haproxy/haproxy.cfg{,.back}

## Write the new file
cat > /etc/haproxy/haproxy.cfg << EOF
global
    user haproxy
    group haproxy

defaults
    mode http
    log global
    retries 2
    timeout connect 3000ms
    timeout server 5000ms
    timeout client 5000ms

frontend kubernetes
    bind $KBLB_ADDR:6443
    option tcplog
    mode tcp
    default_backend kubernetes-master-nodes

backend kubernetes-master-nodes
    mode tcp
    balance roundrobin
    option tcp-check
    server k8s-master-0 $CTRL1_ADDR:6443 check fall 3 rise 2
    server k8s-master-1 $CTRL2_ADDR:6443 check fall 3 rise 2
EOF
```
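If you later grow the control plane to three nodes, as recommended for an etcd quorum, the backend needs one server line per control node. The generator below prints those lines with the same health-check settings used above, numbered from k8s-master-0; `haproxy_backend_servers` is a hypothetical helper, not an HAProxy tool.

```bash
# Hypothetical helper: print one HAProxy "server" line per control-node
# address, reusing the health-check settings above (check fall 3 rise 2).
haproxy_backend_servers() {
    local i=0 addr
    for addr in "$@"; do
        echo "    server k8s-master-$i $addr:6443 check fall 3 rise 2"
        i=$((i + 1))
    done
}
```

`haproxy_backend_servers "$CTRL1_ADDR" "$CTRL2_ADDR"` reproduces the two server lines in the configuration above. After editing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks its syntax before you restart the service.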

To allow for failover, the HAProxy load balancer needs to be able to bind to a nonlocal IP address, meaning an address not assigned to a device on the local system. To enable this, add the following line to /etc/sysctl.conf:

```bash
cat >> /etc/sysctl.conf <<EOF
net.ipv4.ip_nonlocal_bind = 1
EOF
```

Reload the system parameters, then start HAProxy (or restart it if it is already running):

```bash
sysctl -p
systemctl start haproxy
systemctl restart haproxy
```
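Appending with `cat >>` adds a duplicate line every time this step is re-run. A minimal idempotent alternative, assuming the setting is always written exactly as shown, appends the line only when an identical line is not already present; `append_once` is a hypothetical helper name.

```bash
# Hypothetical helper: append LINE to FILE only if FILE does not already
# contain exactly that line (fixed-string, whole-line match).
append_once() {
    local file=$1 line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_once /etc/sysctl.conf 'net.ipv4.ip_nonlocal_bind = 1' && sysctl -p`, after which `sysctl -n net.ipv4.ip_nonlocal_bind` should print 1.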

Check that HAProxy is listening on port 6443 of the load balancer address:

```bash
nc -v "$KBLB_ADDR" 6443
```

Step 2: Install Docker and Related Packages on All Kubernetes Nodes

Docker is used with Kubernetes as the container runtime that pulls images and runs applications in containers. We start by installing Docker on each node.

First, define the environment variables for the node IP addresses, as described in Step 1:

```bash
cat > kubeiprc <<EOF
export KBLB_ADDR=172.31.113.65
export CTRL1_ADDR=172.31.113.48
export CTRL2_ADDR=172.31.113.62
export WORKER1_ADDR=172.31.113.46
export WORKER2_ADDR=172.31.113.49
EOF

# Add the IP addresses of the nodes as environment variables
chmod +x kubeiprc
source kubeiprc
```

Update the apt package index and install packages to allow apt to use a repository over HTTPS:

```bash
apt-get update
apt-get -y install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg2 \
    software-properties-common
```

Add Docker’s official GPG key:

```bash
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
```

Add the Docker repository to APT:

```bash
add-apt-repository \
    "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
```

Install Docker CE:

```bash
apt-get update && apt-get install -y docker-ce
```

Next, confirm Docker is running:
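On a systemd-based host such as Ubuntu 18.04, `systemctl is-active docker` reports whether the daemon is running. Because the service can take a few seconds to come up right after installation, the sketch below wraps any check command in a small retry loop; `wait_for` is a hypothetical helper, not an existing tool.

```bash
# Hypothetical helper: run a check command up to ATTEMPTS times, DELAY seconds
# apart. Returns 0 as soon as the command succeeds, 1 if every attempt fails.
# Usage: wait_for ATTEMPTS DELAY command [args...]
wait_for() {
    local attempts=$1 delay=$2 i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        # Sleep between attempts, but not after the last one
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}
```

For example, `wait_for 10 2 systemctl is-active --quiet docker` tries up to ten times, two seconds apart, and succeeds as soon as Docker reports that it is active.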

Step 3: Prepare Kubernetes on All Nodes (Control and Worker)

Next, install the Kubernetes components on the node. This step applies to both control and worker nodes: install the kubelet, kubeadm, and kubectl packages from the Kubernetes repository.

The kubelet is the main component that runs on every machine in the cluster; it starts pods and containers. kubeadm is the program used to bootstrap the cluster. kubectl is the command-line utility for running commands against the cluster.

Add the Google repository key to ensure software authenticity:

```bash
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
```

Add the Kubernetes repository to the APT sources:

```bash
cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF
```

Update and install the main packages:

```bash
apt-get update && apt-get install -y kubelet kubeadm kubectl
```

To make sure the versions of these packages match the Kubernetes control plane to be installed, and to avoid unexpected behavior, hold the packages back so they are not updated automatically:

```bash
apt-mark hold kubelet kubeadm kubectl
```

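After installing the packages, disable swap, as Kubernetes is not designed to use swap memory (by default, the kubelet refuses to start while swap is enabled):

```bash
# Turn swap off for the running system
swapoff -a
# Comment out swap entries in /etc/fstab so swap stays off after a reboot
sed -i '/ swap / s/^/#/' /etc/fstab
```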
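The sed one-liner above only matches lines where "swap" has a single space on each side, so fstab entries separated by tabs stay active. The sketch below is a slightly more tolerant variant of the same idea: it matches any whitespace around "swap", skips lines that already start with #, and keeps a .bak copy of the file. `disable_swap_entries` is a hypothetical helper name.

```bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Matches spaces or tabs around "swap", leaves lines starting with # alone,
# and saves the previous version as FILE.bak.
disable_swap_entries() {
    sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}
```

Run it as `disable_swap_entries /etc/fstab` after `swapoff -a`; `swapon --show` printing nothing confirms that no swap is active.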

Verify that the kubeadm utility is installed:

```bash
kubeadm version
```

Change the settings so that both Docker and the kubelet use systemd as the cgroup driver. Create the file /etc/docker/daemon.json as follows:
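A commonly used daemon.json for this purpose, modeled on the Docker example in the Kubernetes container-runtime documentation of this period, sets `exec-opts` to `native.cgroupdriver=systemd`; the log and storage settings are optional extras. The sketch below writes that file. `write_docker_daemon_json` is a hypothetical helper, and its path argument exists only so it can be tried on a scratch file.

```bash
# Hypothetical helper: write a Docker daemon.json that selects the systemd
# cgroup driver. The target path defaults to /etc/docker/daemon.json.
write_docker_daemon_json() {
    local target=${1:-/etc/docker/daemon.json}
    mkdir -p "$(dirname "$target")"
    cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
}
```

After writing /etc/docker/daemon.json, apply it with `systemctl daemon-reload && systemctl restart docker`; `docker info | grep -i 'cgroup driver'` should then report systemd.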

Repeat Steps 2 and 3 above for all Kubernetes nodes, both control and worker nodes.

Step 4: Install the Key-Value Store “Etcd” on the First Control Node and Bring Up the Cluster

It is now time to bring up the cluster, starting with the first control node. On that node, source kubeiprc (so that $KBLB_ADDR expands) and create a configuration file called kubeadm-config.yaml. Because etcd runs on the control nodes themselves (stacked etcd), it is configured in kubeadm's local mode:

```bash
cd /root
cat > kubeadm-config.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: stable
apiServer:
  certSANs:
  - "$KBLB_ADDR"
controlPlaneEndpoint: "$KBLB_ADDR:6443"
etcd:
  local:
    # Stacked etcd: kubeadm runs an etcd member on each control node
    dataDir: /var/lib/etcd
EOF
```

Initialize the Kubernetes control plane:

```bash
sudo kubeadm init --config=kubeadm-config.yaml --upload-certs
```

If the configuration is correct, kubeadm reports that the control plane has initialized successfully.

The output ends with the kubeadm join commands for adding control-plane nodes and worker nodes to the cluster. Save this last part of the output to a file, as you will need it later to add nodes.

Note that the certificate key gives access to sensitive cluster data. As a safeguard, the uploaded certificates are deleted after two hours.

On the configured control node, apply the Weave CNI (Container Network Interface) network plugin.

This plugin creates a virtual network that connects Docker containers deployed across multiple hosts as if they were all plugged into one big switch. This lets containerized applications communicate with each other easily.

```bash
kubectl --kubeconfig /etc/kubernetes/admin.conf apply -f \
    "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"
```

Check that the pods of system components are running:

```bash
# -w keeps watching for changes; press Ctrl+C to stop
kubectl --kubeconfig /etc/kubernetes/admin.conf get pod -n kube-system -w
kubectl --kubeconfig /etc/kubernetes/admin.conf get nodes
```

Step 5: Add a Second Control Node (master2) to the Cluster

Now that the first control node is running, we can add a second control node with the “kubeadm join” command. To join the master2 node to the cluster, use the join command shown in the output of the control plane initialization on control node 1.

Run it on the new control node. Make sure to copy the join command from your own output of the first control node initialization rather than the example below:

```bash
kubeadm join 172.31.113.50:6443 --token whp74t.cslbmfbgh34ok21a \
    --discovery-token-ca-cert-hash sha256:50d6b211323316fcb426c87ed8b604eccef0c1eff98d3a44f4febe13070488d2 \
    --control-plane --certificate-key 2ee6f911cba3bddf19159e7b43d52ed446367cb903dc9e5d9e7969abccb70a4b
```

The output confirms that the new node has joined the cluster.

The --certificate-key flag of the “kubeadm join” command causes the control plane certificates to be downloaded from the “kubeadm-certs” Secret in the cluster and decrypted with that key, so the certificate and key files are created locally.

The uploaded certificates expire and are deleted after two hours by default. If the join fails because they have expired, upload them again from the first control node with:

```bash
sudo kubeadm init phase upload-certs --upload-certs
```

This prints a new certificate key, so the “kubeadm join” command must be updated to pass that value as the --certificate-key parameter.
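Assuming the new key appears on its own line as 64 hexadecimal characters, like the key in the example join command above, the sketch below picks it out of the command output so it can be dropped into a fresh control-plane join command. `extract_certificate_key` is a hypothetical helper; `kubeadm token create --print-join-command`, used in the comments, is a real kubeadm subcommand that prints a new worker join command.

```bash
# Hypothetical helper: read kubeadm output on stdin and print the last line
# consisting of exactly 64 lowercase hex characters. Fails if none is found.
extract_certificate_key() {
    grep -Ex '[0-9a-f]{64}' | tail -n 1 | grep .
}

# Example usage on the first control node (run as root):
#   KEY=$(kubeadm init phase upload-certs --upload-certs | extract_certificate_key)
#   JOIN=$(kubeadm token create --print-join-command)
#   echo "$JOIN --control-plane --certificate-key $KEY"
```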

Check that nodes and pods on all control nodes are okay:

```bash
kubectl --kubeconfig /etc/kubernetes/admin.conf get nodes
kubectl --kubeconfig /etc/kubernetes/admin.conf get pod -n kube-system -w
```

Step 6: Add the Worker Nodes

Now worker nodes can be added to the Kubernetes cluster.

To add a worker node, run on it the “kubeadm join” command shown in the output of the control plane initialization on the first control node. The example below is illustrative; use the command you saved from your own control node build:

```bash
kubeadm join 172.31.113.50:6443 --token whp74t.cslbmfbgh34ok21a \
    --discovery-token-ca-cert-hash sha256:50d6b211323316fcb426c87ed8b604eccef0c1eff98d3a44f4febe13070488d2
```

The output confirms the worker node is added.
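If the saved join output is lost, the value for --discovery-token-ca-cert-hash can be recomputed on a control node: it is the SHA-256 digest of the cluster CA's DER-encoded public key, and the openssl pipeline below follows the one given in the kubeadm join documentation. It assumes an RSA CA key (kubeadm's default); `ca_cert_hash` is a hypothetical wrapper name.

```bash
# Hypothetical wrapper: print the hex SHA-256 of the CA certificate's
# DER-encoded public key, the value kubeadm expects after "sha256:".
ca_cert_hash() {
    local ca=${1:-/etc/kubernetes/pki/ca.crt}
    openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Example usage on a control node:
#   echo "sha256:$(ca_cert_hash)"
```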

Check the nodes that are in the cluster to confirm the addition:

```bash
kubectl --kubeconfig /etc/kubernetes/admin.conf get nodes
```
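As the cluster grows, reading the STATUS column by eye gets tedious. The sketch below reads the output of `kubectl get nodes --no-headers` and lists every node whose status is not exactly Ready (including cordoned nodes shown as Ready,SchedulingDisabled), returning non-zero if there are any; `not_ready_nodes` is a hypothetical helper. `kubectl wait --for=condition=Ready nodes --all --timeout=300s` is a built-in alternative that blocks until all nodes are Ready.

```bash
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin,
# print the name of each node whose STATUS column is not "Ready", and exit
# non-zero if at least one such node was found.
not_ready_nodes() {
    awk 'BEGIN { bad = 0 } $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Example usage:
#   kubectl --kubeconfig /etc/kubernetes/admin.conf get nodes --no-headers \
#       | not_ready_nodes && echo "all nodes Ready"
```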

Now the Kubernetes cluster with two control nodes and two worker nodes is set up and ready to operate.

The cluster can be extended in the same way with more control nodes or additional worker nodes. A second HAProxy load balancer can also be added so that the load balancer itself is highly available.

Challenges of Using Kubernetes with Bare Metal Servers

Kubernetes on bare-metal servers is used to keep data private and secure and to run advanced applications such as AI. An on-premises setup, however, brings many challenges. Some of the common ones are:

Node Management – The first challenge is to manage the active nodes. To manage this, a node pool is used.

Newly configured nodes appear in the pool, and whenever an empty node is attached to the cluster, its details are shown there. Here, maintaining security is the primary concern.

Cluster initialization – In a regular cluster setup, Kubernetes manages the master and worker nodes in the cluster automatically. Bare-metal servers let administrators use an “advanced mode” option to override and customize nodes.

This setup involves installing an up-to-date Docker version, creating the etcd key-value store, establishing the flannel network layer, starting the kubelet, and running the Kubernetes components appropriate for each node’s role (master vs. worker). Managing each of these pieces individually becomes a challenge.

Containerized kubelet – The kubelet needs to mount directories containing secrets into containers to support the service account mechanism.

On a bare-metal server, doing this from a containerized kubelet is tricky and requires a complex sequence of steps that turns out to be fragile. Alternatives such as running the kubelet natively on the host are needed for smooth operation.

Networking hurdles – For the smooth working of the Kubernetes cluster, many infrastructure services need to communicate across nodes using a variety of ports and protocols.

Customer nodes are often found to block some or all of the traffic on the required ports, which makes the nodes hard to reach. A slow server can also make a node difficult to access.
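As a starting point for firewall rules, the sketch below prints the ports that the nodes in this guide need to accept, in ufw's port/proto syntax (ufw writes ranges as start:end). The lists are assumptions based on the kubeadm port requirements of the Kubernetes 1.18 era (API server 6443, etcd 2379-2380, kubelet 10250, scheduler 10251, controller-manager 10252, NodePort range 30000-32767) plus Weave Net's TCP 6783 and UDP 6783-6784; check them against the documentation for the versions you run. `required_ports` is a hypothetical helper.

```bash
# Hypothetical helper: print the ports a node must accept, one per line,
# in ufw "port/proto" syntax. Argument: control | worker.
required_ports() {
    case $1 in
        control)
            printf '%s\n' 6443/tcp 2379:2380/tcp 10250/tcp 10251/tcp 10252/tcp ;;
        worker)
            printf '%s\n' 10250/tcp 30000:32767/tcp ;;
        *)
            echo "usage: required_ports control|worker" >&2
            return 1 ;;
    esac
    # Weave Net control (TCP) and data plane (UDP) ports, needed on every node
    printf '%s\n' 6783/tcp 6783:6784/udp
}
```

For example, on a control node: `for p in $(required_ports control); do ufw allow "$p"; done`.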

Apart from these challenges, monitoring and maintaining security require a vigilant approach when you are working with on-premises bare-metal servers.

Conclusion

The Kubernetes deployment demonstrated above uses a cluster of bare-metal servers. With additional plugins, this setup can connect multiple sites at the same time.

As a result, you can build a scalable distributed environment. Optimizing inter-site connectivity for low latency improves the performance of the Kubernetes clusters.

Finally, you can choose a bare-metal setup over a virtual machine setup to achieve better scalability and to make use of hardware accelerators. This setup also helps with applications such as machine learning and AI that require direct access to the machine.

This shift will mark the beginning of a third era of Kubernetes built on hybrid IT environments, in which Kubernetes instances run on both virtual machines and bare-metal servers in the near future.

In conclusion, the number of IT organizations that choose bare-metal servers will almost certainly increase, even though it is a labor-intensive way to build a Kubernetes host infrastructure.

Before you go with a bare-metal setup, weigh your priorities and preferences.

If you want a turnkey, managed, cloud-based Kubernetes service, choose a provider such as AWS. If, on the other hand, you don’t mind the effort of setting up a cluster yourself, on-premises Kubernetes on bare-metal servers will give you the best solution.


RedBlink is an AI consulting and generative AI development company, offering a range of services in the field of artificial intelligence. With their expertise in ChatGPT app development and machine learning development, they provide businesses with the ability to leverage advanced technologies for various applications. By hiring the skilled team of ChatGPT developers and machine learning engineers at RedBlink, businesses can unlock the potential of AI and enhance their operations with customized solutions tailored to their specific needs.

Director of Digital Marketing | NLP Entity SEO Specialist | Data Scientist | Growth Ninja

With more than 15 years of experience, Loveneet Singh is a seasoned digital marketing director, NLP entity SEO specialist, and data scientist. With a passion for all things Google, WordPress, SEO services, web development, and digital marketing, he brings a wealth of knowledge and expertise to every project. Loveneet’s commitment to creating people-first content that aligns with Google’s guidelines ensures that his articles provide a satisfying experience for readers. Stay updated with his insights and strategies to boost your online presence.